Edge computing changes where AI applications process their data: on or near the device that generates it, rather than in a distant data center. The payoff is lower latency and less dependence on a network connection.

This article looks at what edge computing means for AI, which applications benefit from it, the trade-offs it brings, and the technologies that make it work.

Overview of Edge Computing in AI Applications

Edge computing is the practice of processing data close to where it is generated rather than sending it to a centralized data center, as traditional cloud computing does. In AI applications, this means running data processing and analysis directly on the device or at the edge of the network, which reduces latency and enables faster decision-making.

One of the key advantages of edge computing in AI applications is the ability to handle data locally, without constantly transferring large amounts of data to the cloud for processing. This results in quicker response times, especially in scenarios where low latency is critical.

Examples of AI Applications Benefiting from Edge Computing

- Autonomous vehicles: Edge computing enables self-driving cars to process sensor data in real time, allowing for faster decision-making and reaction to changing road conditions.

- Smart home devices: AI-powered smart home devices can analyze data locally to personalize user experiences and optimize energy consumption without relying on cloud resources.

- Healthcare monitoring: Edge AI in healthcare allows patient data to be monitored continuously on the device, so that abnormal readings can trigger timely intervention and inform personalized treatment plans.

Benefits of Integrating Edge Computing in AI

Edge computing offers numerous advantages when integrated into AI applications. By bringing computation closer to the data source, it enhances speed, efficiency, and cost-effectiveness in AI tasks.

Improved Speed and Efficiency

Edge computing significantly reduces latency by processing data closer to where it is generated. This proximity allows AI algorithms to make real-time decisions without relying on a distant cloud server. As a result, tasks are executed faster, leading to quicker responses and improved overall efficiency in AI operations.
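To make the latency argument concrete, here is a minimal sketch of a latency budget. The function names and every number in it are illustrative assumptions, not measurements from any real system; the point is only that removing the network round trip can outweigh slower on-device inference.

```python
def total_latency_ms(network_rtt_ms: float, inference_ms: float) -> float:
    """Time from sensor reading to decision: network round trip plus inference."""
    return network_rtt_ms + inference_ms


def meets_deadline(latency_ms: float, deadline_ms: float) -> bool:
    """True if the decision arrives within the application's deadline."""
    return latency_ms <= deadline_ms


# Assumed, illustrative figures (hypothetical):
# the cloud server is fast but far away; the edge chip is slower but local.
cloud_latency = total_latency_ms(network_rtt_ms=80.0, inference_ms=10.0)
edge_latency = total_latency_ms(network_rtt_ms=0.0, inference_ms=35.0)

DEADLINE_MS = 50.0  # hypothetical real-time requirement

print("cloud meets deadline:", meets_deadline(cloud_latency, DEADLINE_MS))
print("edge meets deadline:", meets_deadline(edge_latency, DEADLINE_MS))
```

With these assumed figures the edge path fits the deadline and the cloud path does not, even though the edge hardware takes longer to run the model.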

Cost-Effectiveness

Deploying edge computing in AI systems can lead to cost savings in several ways. By reducing the need for constant data transmission to and from the cloud, edge computing minimizes bandwidth usage and the associated costs. With local processing, organizations can also reserve cloud services for the work that needs them and spend less on cloud compute. Sending less traffic to the cloud also helps AI applications scale, because each added device puts little extra load on the network and on cloud resources.
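The bandwidth saving can be estimated with simple arithmetic. The sketch below compares a device that streams raw data to the cloud with one that runs the model locally and uploads only small result messages. The message sizes and rates are assumptions chosen for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_megabytes(bytes_per_message: int, messages_per_second: float) -> float:
    """Data uploaded per day, in megabytes (1 MB = 1,000,000 bytes)."""
    return bytes_per_message * messages_per_second * SECONDS_PER_DAY / 1_000_000


# Hypothetical camera streaming compressed frames to the cloud for inference.
raw_stream_mb = daily_megabytes(bytes_per_message=50_000, messages_per_second=10)

# Same camera running the model locally and uploading one small summary per second.
summary_mb = daily_megabytes(bytes_per_message=200, messages_per_second=1)

reduction_factor = raw_stream_mb / summary_mb

print(f"raw stream: {raw_stream_mb:,.0f} MB/day")
print(f"summaries:  {summary_mb:,.2f} MB/day")
print(f"reduction:  {reduction_factor:,.0f}x")
```

Under these assumptions the upload volume drops from about 43 GB per day to about 17 MB per day. Real savings depend on how much of the raw data the application still needs in the cloud.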

Challenges and Limitations of Edge Computing in AI

Implementing edge computing in AI applications comes with several challenges and limitations that can reduce how efficiently and effectively AI tasks run at the edge.

Connectivity Issues

One common challenge with edge computing in AI is connectivity. Because edge devices operate at the edge of the network, they may encounter network latency, packet loss, or unreliable connections. Local inference can usually continue during an outage, but anything that depends on the network, such as uploading results, receiving model updates, or offloading heavier processing, can be delayed, which affects real-time decision-making across the system.
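One common way to cope with an unreliable link is store-and-forward: keep results in a bounded local buffer and deliver them when the connection returns. The sketch below is one possible shape for this, not a reference implementation. The class `StoreAndForward`, the `send` callback, and the fake link used in the demo are all hypothetical names introduced for illustration.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffers results locally and delivers them in order when the link is up."""

    def __init__(self, send: Callable[[dict], bool], max_buffered: int = 1000):
        # `send` returns True on successful delivery, False otherwise.
        self._send = send
        # A bounded deque silently drops the OLDEST item once it is full,
        # so memory use stays fixed on a constrained device.
        self._pending: Deque[dict] = deque(maxlen=max_buffered)

    def submit(self, result: dict) -> None:
        """Queue a result and try to deliver everything that is pending."""
        self._pending.append(result)
        self.flush()

    def flush(self) -> int:
        """Deliver pending results in order; stop at the first failure."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break
            self._pending.popleft()
            sent += 1
        return sent

    @property
    def backlog(self) -> int:
        return len(self._pending)


# Demo with a fake link that starts out disconnected.
link = {"up": False}
delivered = []


def fake_send(message: dict) -> bool:
    if link["up"]:
        delivered.append(message)
    return link["up"]


queue = StoreAndForward(fake_send, max_buffered=3)
for i in range(5):
    queue.submit({"reading": i})  # link is down, so results accumulate

link["up"] = True
queue.flush()  # only the 3 newest readings survived the outage
```

Dropping the oldest entries is a design choice that suits monitoring data, where recent readings matter most. An application that cannot lose data would need persistent storage instead of an in-memory buffer.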

Resource Constraints

Edge devices often have limited resources in terms of processing power, memory, and storage. This can pose a limitation when running complex AI models or algorithms that require significant computational resources. As a result, the performance of AI applications on edge devices may be compromised.
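A widely used way to fit models into limited memory is quantization, which stores weights as 8-bit integers instead of 32-bit floats at the cost of a small rounding error. The article does not name a specific technique, so treat the following as one illustrative option. It is a pure-Python sketch of symmetric int8 quantization; the function names are hypothetical, and real deployments would rely on the tooling of their ML framework.

```python
from array import array
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0  # avoid dividing by zero
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]   # stand-in for a layer's float weights
q, scale = quantize_int8(weights)
packed = array("b", q)             # signed 8-bit storage: 1 byte per weight
restored = dequantize(q, scale)

# Each restored weight differs from the original by at most half a step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("bytes per weight:", packed.itemsize)
print("max rounding error:", max_error)
```

Storing one byte per weight instead of four cuts weight memory to roughly a quarter. Whether the added rounding error is acceptable depends on the model and has to be checked against its accuracy on real data.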

Security Concerns

Security is a major concern in edge AI systems. Edge devices are often more exposed to attack than centralized cloud servers: they tend to sit in physically accessible locations and are harder to patch consistently across a large fleet. Data privacy, integrity, and confidentiality can be at risk when sensitive information is processed on edge devices. Implementing robust security measures is crucial to mitigate these risks.
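As one small example of such a measure, a device can attach a message authentication code to each result so the receiver can detect tampering in transit. The sketch below uses HMAC-SHA256 from Python's standard library. The function names, the payload fields, and the hard-coded demo key are assumptions for illustration; a real device would keep its key in secure storage. Note that this protects integrity and authenticity only. Confidentiality would additionally require encryption, for example TLS on the connection.

```python
import hashlib
import hmac
import json


def sign(payload: dict, key: bytes) -> dict:
    """Serialize the payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify(message: dict, key: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, message["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


DEMO_KEY = b"demo-key-do-not-use-in-production"  # hypothetical shared secret

message = sign({"device": "sensor-1", "heart_rate": 72}, DEMO_KEY)

# An attacker who alters the body without knowing the key cannot fix the tag.
tampered = dict(message, body=message["body"].replace("72", "180"))

print("original valid:", verify(message, DEMO_KEY))
print("tampered valid:", verify(tampered, DEMO_KEY))
```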

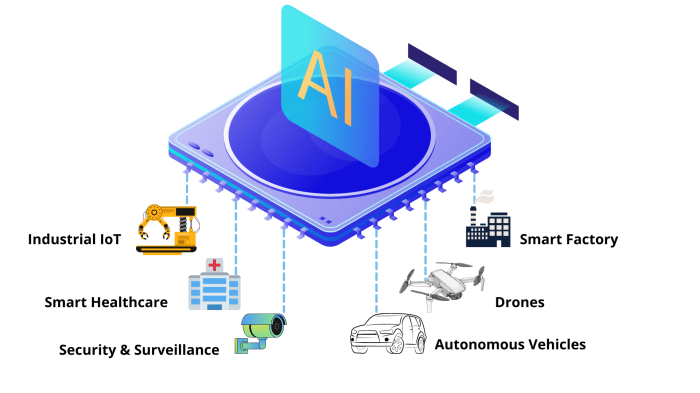

Edge Computing Technologies for AI

Edge computing technologies play a crucial role in enabling AI applications to run efficiently and effectively. By bringing computation closer to the data source, edge computing reduces latency and improves overall performance. Let’s explore the various technologies used in edge computing for AI applications.

IoT Devices in Edge Computing

IoT devices are instrumental in enabling edge computing for AI. These devices collect and transmit vast amounts of data from the physical world to the edge servers for processing. By leveraging IoT devices, edge computing can analyze real-time data and make decisions faster, enhancing the capabilities of AI applications.

Edge Servers and Edge Analytics

Edge servers are critical components of edge computing infrastructure. These servers are located close to the IoT devices and are responsible for processing their data in real time. Edge analytics is the analysis performed at that point: examining data at the edge to extract insights and make decisions without first sending it to the cloud. By combining edge servers and edge analytics, AI applications can act on data as it arrives.
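A simple form of edge analytics is to flag unusual sensor readings on the edge server itself and forward only the alerts. The following is a minimal sketch under stated assumptions: the class name, window size, warm-up length, and threshold are hypothetical choices, and the rolling z-score test stands in for whatever model a real deployment would use.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings that deviate strongly from a rolling window of recent values."""

    def __init__(self, window: int = 20, threshold: float = 3.0, warmup: int = 5):
        self._values = deque(maxlen=window)  # recent normal readings
        self._threshold = threshold          # allowed deviation, in standard deviations
        self._warmup = warmup                # samples needed before judging

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current window."""
        is_anomaly = False
        if len(self._values) >= self._warmup:
            mu = mean(self._values)
            sigma = pstdev(self._values)
            if sigma > 0 and abs(value - mu) > self._threshold * sigma:
                is_anomaly = True
        if not is_anomaly:
            # Keep anomalies out of the baseline so one spike does not mask the next.
            self._values.append(value)
        return is_anomaly


detector = RollingAnomalyDetector()
readings = [20.0, 20.5, 19.8, 20.2, 20.1, 20.3, 35.0, 20.0]  # made-up temperatures
alerts = [r for r in readings if detector.observe(r)]
print("alerts to forward:", alerts)
```

In this run only the 35.0 reading is flagged, so one message is forwarded instead of eight. Excluding anomalies from the baseline is a design choice; it keeps the window stable but means a genuine shift in normal behavior is reported as an anomaly until the detector is reset.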